The Dawn of Computing: Early Processor Beginnings

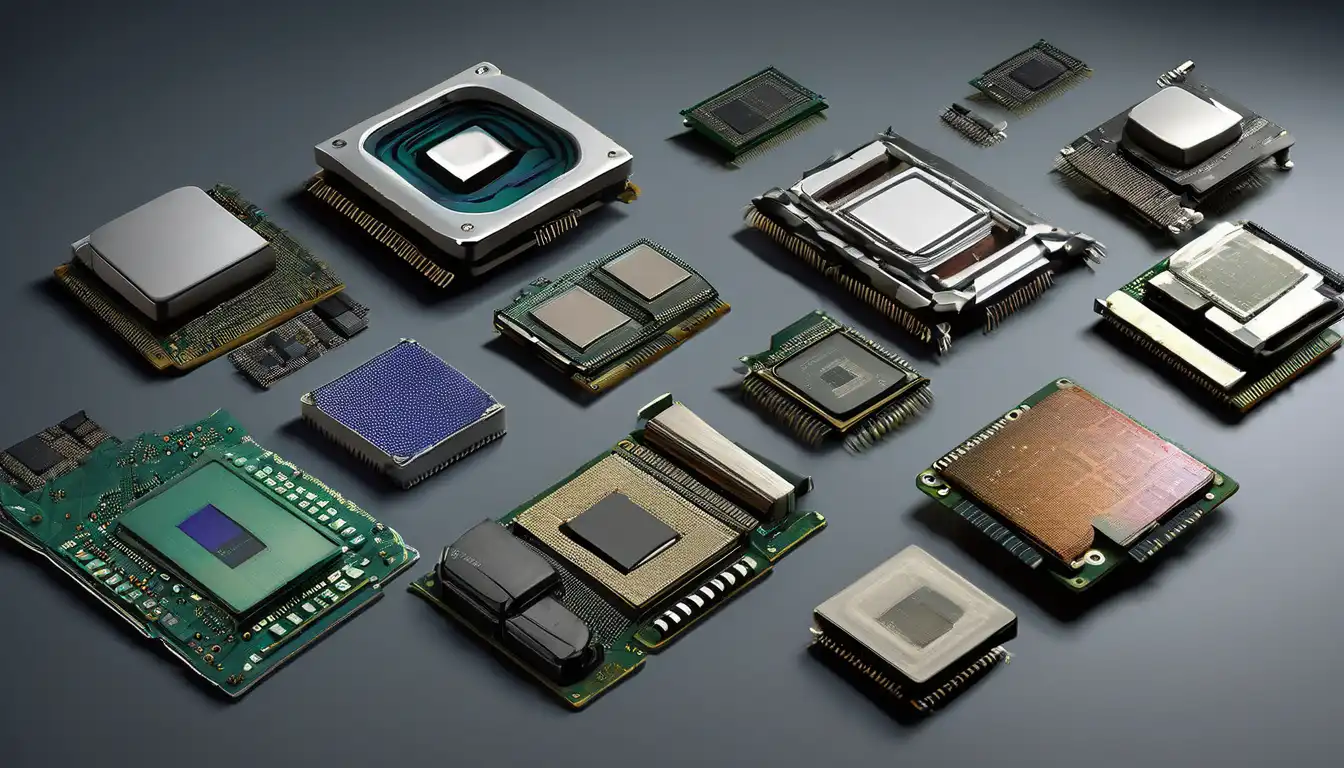

The evolution of computer processors represents one of the most remarkable technological journeys in human history. Beginning with massive vacuum tube systems that occupied entire rooms, processors have transformed into microscopic marvels that power everything from smartphones to supercomputers. This transformation didn't happen overnight—it's a story of continuous innovation spanning nearly eight decades.

In the 1940s, the first electronic computers used vacuum tubes as their switching and processing elements. ENIAC, unveiled in 1946, contained nearly 18,000 vacuum tubes and drew roughly 150 kilowatts of power. Despite their limitations, these pioneering systems laid the foundation for modern computing and demonstrated the potential of electronic data processing.

The Transistor Revolution

The invention of the transistor in 1947 marked a pivotal moment in processor evolution. These semiconductor devices were smaller, more reliable, and consumed significantly less power than vacuum tubes. By the late 1950s, transistors had become the standard building blocks for computer processors, enabling more compact and efficient computing systems.

The transition to transistor-based processors made computers accessible to more businesses and research institutions. Systems like the IBM 1401, introduced in 1959, brought computing within reach of many medium-sized organizations. Processor designs also grew more elaborate during this era, with separate circuitry dedicated to arithmetic, control, and memory access.

The Integrated Circuit Era

Integrated circuits were invented at the end of the 1950s and widely adopted during the 1960s, and they marked the next major leap in processor technology. Jack Kilby at Texas Instruments (1958) and Robert Noyce at Fairchild Semiconductor (1959) independently developed ways to fabricate multiple transistors and their interconnections on a single semiconductor chip, creating the first microchips. This innovation dramatically reduced the size and cost of processors while improving their reliability and performance.

Intel's introduction of the 4004 microprocessor in 1971 marked the beginning of the modern processor era. This 4-bit processor contained 2,300 transistors and operated at 740 kHz—modest by today's standards, but revolutionary at the time. The 4004 demonstrated that complete central processing units could be manufactured on single chips, paving the way for the personal computer revolution.
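A 4-bit register can hold only the values 0 through 15, so any larger result overflows into a carry bit. The sketch below uses a hypothetical helper, `add4`, to model generic fixed-width unsigned addition. It illustrates what the word size means and does not model the 4004's actual instruction set.

```python
from typing import Tuple

# Hypothetical illustration of 4-bit unsigned addition (not 4004 instruction semantics).
WIDTH = 4
MASK = (1 << WIDTH) - 1  # 0b1111 == 15, the largest 4-bit value


def add4(a: int, b: int) -> Tuple[int, int]:
    """Add two 4-bit values and return (4-bit result, carry-out bit)."""
    if not (0 <= a <= MASK and 0 <= b <= MASK):
        raise ValueError("operands must fit in 4 bits")
    total = a + b
    # Keep only the low 4 bits; the bit above them becomes the carry.
    return total & MASK, total >> WIDTH


print(add4(7, 5))  # (12, 0): the sum fits in 4 bits
print(add4(9, 8))  # (1, 1): 17 does not fit, leaving 1 plus a carry
```

To work with larger numbers, a program must chain several such additions through the carry. This is one reason the wider word sizes of later processors mattered.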

The x86 Architecture Emerges

Intel's 8086 processor, released in 1978, established the x86 architecture that would dominate personal computing for decades. This 16-bit processor introduced features that later designs retained. The most notable was segmented memory addressing, which let the chip reach 1 MB of memory despite its 16-bit registers. Each new x86 generation remained backward compatible, so software written for earlier chips kept running on newer ones and users' software investments were protected.
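In the 8086's real mode, a memory location is named by two 16-bit values, a segment and an offset. The processor combines them into a 20-bit physical address by shifting the segment left 4 bits (multiplying by 16) and adding the offset. The sketch below shows that arithmetic. `physical_address` is a hypothetical helper name, and the wrap-around past 1 MB reflects the original 8086 rather than later chips.

```python
# Sketch of 8086 real-mode address translation:
#   physical address = segment * 16 + offset, on a 20-bit address bus.
# physical_address is a hypothetical helper used for illustration.

ADDRESS_BITS = 20
ADDRESS_MASK = (1 << ADDRESS_BITS) - 1  # 0xFFFFF, the top of the 1 MB space


def physical_address(segment: int, offset: int) -> int:
    """Translate a 16-bit segment and 16-bit offset into a 20-bit address."""
    if not (0 <= segment <= 0xFFFF and 0 <= offset <= 0xFFFF):
        raise ValueError("segment and offset must be 16-bit values")
    # Shift the segment left by 4 bits and add the offset. On the original
    # 8086, sums beyond 0xFFFFF wrap around to the bottom of memory.
    return ((segment << 4) + offset) & ADDRESS_MASK


print(hex(physical_address(0x1234, 0x0010)))  # 0x12350
print(hex(physical_address(0x1235, 0x0000)))  # 0x12350 (same byte, different pair)
print(hex(physical_address(0xFFFF, 0x0010)))  # 0x0 (wraps past 1 MB)
```

Many different segment:offset pairs can name the same byte. That overlap is the price of stretching 16-bit values across a 20-bit address space.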

The 1980s saw rapid advancement in processor capabilities. Intel's 80286 brought protected mode operation and virtual memory support, while the 80386 introduced 32-bit processing in 1985. These developments enabled more sophisticated operating systems and applications, driving the growth of the personal computer market. Competition from companies like AMD helped accelerate innovation and reduce prices.
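One concrete measure of these generational jumps is how much memory each chip could address directly. The sketch below assumes the commonly cited physical address widths: 20 bits for the 8086, 24 bits for the 80286, and 32 bits for the original 80386. The `ADDRESS_LINES` table and the `format_bytes` helper are illustrative names, not taken from any library.

```python
# Sketch: memory reachable with each chip's physical address width.
# Widths are the commonly cited figures; names here are illustrative only.

ADDRESS_LINES = {
    "8086 (1978)": 20,
    "80286 (1982)": 24,
    "80386 (1985)": 32,
}


def format_bytes(n: int) -> str:
    """Render a byte count in binary units, truncating to whole units."""
    for unit in ("B", "KiB", "MiB", "GiB", "TiB"):
        if n < 1024:
            return f"{n} {unit}"
        n //= 1024
    return f"{n} PiB"


for chip, bits in ADDRESS_LINES.items():
    print(f"{chip}: {bits}-bit addresses -> {format_bytes(1 << bits)}")
```

Going from 1 MiB to 4 GiB of addressable memory is a large part of what made the more sophisticated operating systems of the period practical.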

The Clock Speed Race and Multicore Revolution

Throughout the 1990s and early 2000s, processor manufacturers competed intensely on clock speed. Intel's Pentium processors and AMD's Athlon series pushed frequencies from tens of megahertz to multiple gigahertz. The same period brought superscalar execution, out-of-order execution, and more sophisticated cache hierarchies to mainstream desktop processors. These techniques increased the work done in each clock cycle, so they improved performance without requiring higher clock speeds.
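The standard way to see why doing more per cycle matters is the "iron law" of processor performance: execution time equals instruction count times average cycles per instruction (CPI), divided by clock rate. The sketch below compares two hypothetical CPUs running at the same 200 MHz clock. All the numbers are assumptions chosen for illustration, not measurements of real chips.

```python
# Sketch of the iron law of processor performance:
#   execution time = instruction count * cycles per instruction / clock rate
# Both CPUs and all numbers below are hypothetical.


def execution_time(instructions: float, cpi: float, clock_hz: float) -> float:
    """Seconds needed to run `instructions` at an average of `cpi` cycles each."""
    return instructions * cpi / clock_hz


PROGRAM = 1_000_000_000  # one billion instructions

# Simple in-order design: at most one instruction per cycle, plus stalls.
scalar = execution_time(PROGRAM, cpi=1.5, clock_hz=200e6)
# Superscalar, out-of-order design at the SAME clock: several instructions
# can finish per cycle and better caching means fewer memory stalls.
superscalar = execution_time(PROGRAM, cpi=0.75, clock_hz=200e6)

print(f"scalar:      {scalar:.2f} s")               # 7.50 s
print(f"superscalar: {superscalar:.2f} s")          # 3.75 s
print(f"speedup:     {scalar / superscalar:.1f}x")  # 2.0x
```

Halving CPI has the same effect on runtime as doubling the clock rate, which is how these architectural techniques delivered speedups independently of frequency.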

By the mid-2000s, power consumption and heat dissipation had made further large clock speed increases impractical. The industry responded by shifting to multicore designs, with Intel's Core 2 Duo and AMD's Athlon 64 X2 leading the transition. This approach allowed processors to handle multiple tasks simultaneously while keeping power requirements reasonable.
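The power problem follows from the standard approximation for CMOS dynamic (switching) power: P ≈ α · C · V² · f. Here α is the activity factor, C the switched capacitance, V the supply voltage, and f the clock frequency. Running a chip faster usually also requires a higher voltage, and voltage enters squared. The sketch below compares doubling one core's clock against adding a second core. Every value is a hypothetical normalized number, and leakage power is ignored.

```python
# Sketch of CMOS dynamic power: P ~ alpha * C * V^2 * f.
# All values are hypothetical and normalized to one baseline core;
# static (leakage) power is ignored.


def dynamic_power(alpha: float, capacitance: float, voltage: float, freq: float) -> float:
    """Switching power: activity factor * capacitance * voltage^2 * frequency."""
    return alpha * capacitance * voltage ** 2 * freq


# Baseline: one core, normalized so its power is 1.0.
base = dynamic_power(alpha=1.0, capacitance=1.0, voltage=1.0, freq=1.0)

# Option A: double one core's clock. Assume (hypothetically) this needs
# 30% more supply voltage to switch reliably at the higher speed.
faster_core = dynamic_power(1.0, 1.0, voltage=1.3, freq=2.0)

# Option B: two baseline cores, i.e. twice the switched capacitance
# at the same voltage and frequency.
two_cores = dynamic_power(1.0, 2.0, voltage=1.0, freq=1.0)

print(f"one core, 2x clock: {faster_core / base:.2f}x power")  # 3.38x
print(f"two cores:          {two_cores / base:.2f}x power")    # 2.00x
```

Both options offer roughly twice the peak throughput. The two-core option gets there with less power, but it delivers that throughput only when the workload can be split across cores.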

Modern Processor Innovations

Today's processors incorporate many advanced technologies that would have seemed like science fiction just decades ago. Features like simultaneous multithreading, advanced power management, and integrated graphics have become standard. Processors now contain billions of transistors with features measured in nanometers. Leading-edge chips are built on processes marketed as 5 nm and 3 nm, though these node names label process generations rather than the literal size of any single feature.

The evolution continues with specialized processors for artificial intelligence, machine learning, and edge computing. Modern CPUs often work alongside GPUs, TPUs, and other accelerators to handle specific workloads efficiently. Quantum computing is being explored as a further frontier, though it is expected to complement classical processors on particular classes of problems rather than replace them.

Key Milestones in Processor Evolution

- 1947: Invention of the transistor at Bell Labs

- 1971: Intel 4004, the first commercial microprocessor

- 1978: Intel 8086 establishes x86 architecture

- 1985: Intel 80386 introduces 32-bit processing

- 1993: Intel Pentium brings superscalar architecture to mainstream

- 2005: First dual-core desktop processors ship, and multicore designs soon become standard

- 2010s: Mobile processors revolutionize computing

- 2020s: AI-optimized processors and quantum computing research

The Impact on Modern Computing

The evolution of processors has fundamentally transformed how we live and work. From enabling global communication networks to powering scientific research, modern processors touch nearly every aspect of contemporary life. Moore's Law describes the steady growth in transistor counts behind this progress. It is Gordon Moore's observation, made in 1965 and revised in 1975, that the number of transistors on a chip doubles roughly every two years, and this growth has driven innovation across countless industries.
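Moore's Law is commonly stated as a doubling of transistor counts roughly every two years. The sketch below applies that rule to the Intel 4004's 2,300 transistors from 1971. The two-year period is the usual approximation and not a measured constant, and the projected figures are arithmetic only, not counts for any specific chip.

```python
# Sketch of Moore's Law as a simple doubling rule:
#   N(year) = N0 * 2 ** ((year - year0) / T)
# Start point: Intel 4004 (1971, ~2,300 transistors).
# The two-year doubling period T is an approximation, not a measured constant.

START_YEAR = 1971
START_TRANSISTORS = 2_300
DOUBLING_YEARS = 2


def projected_transistors(year: int) -> float:
    """Transistor count implied by steady doubling since the 4004."""
    return START_TRANSISTORS * 2 ** ((year - START_YEAR) / DOUBLING_YEARS)


for year in (1971, 1981, 1991, 2001, 2011, 2021):
    print(f"{year}: {projected_transistors(year):,.0f}")
```

Fifty years at this rate is 25 doublings, so the 2021 projection comes to about 77 billion transistors. That is the "billions" scale described for today's processors, and it shows how quickly steady exponential growth compounds.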

Looking ahead, processor evolution shows no sign of stopping, even though the traditional gains from clock speed and transistor scaling have slowed. Researchers are exploring new materials like graphene, three-dimensional chip designs, and neuromorphic computing architectures that mimic the human brain. These developments may continue the remarkable trajectory that began with simple vacuum tubes nearly 80 years ago.

The story of processor evolution is ultimately one of human ingenuity and perseverance. Each generation built upon the successes and lessons of previous ones, creating an accelerating cycle of innovation that has reshaped our world. As we stand on the brink of new computing paradigms, it's worth reflecting on how far we've come—and how much further we might go in the decades ahead.